Serverless Website Architecture

What is serverless?

Serverless technology leverages the idea of using server resources only as needed without setting up a dedicated server.

By using cloud services (e.g., AWS, GCP, or Azure) that provide on-demand compute and storage, users can set up websites without purchasing a dedicated server to handle website requests.

Despite what the name suggests, machines still store the website contents and serve requests. However, the ownership and maintenance of that physical hardware are abstracted away from the serverless user.

Why use serverless web hosting?

Cost benefits

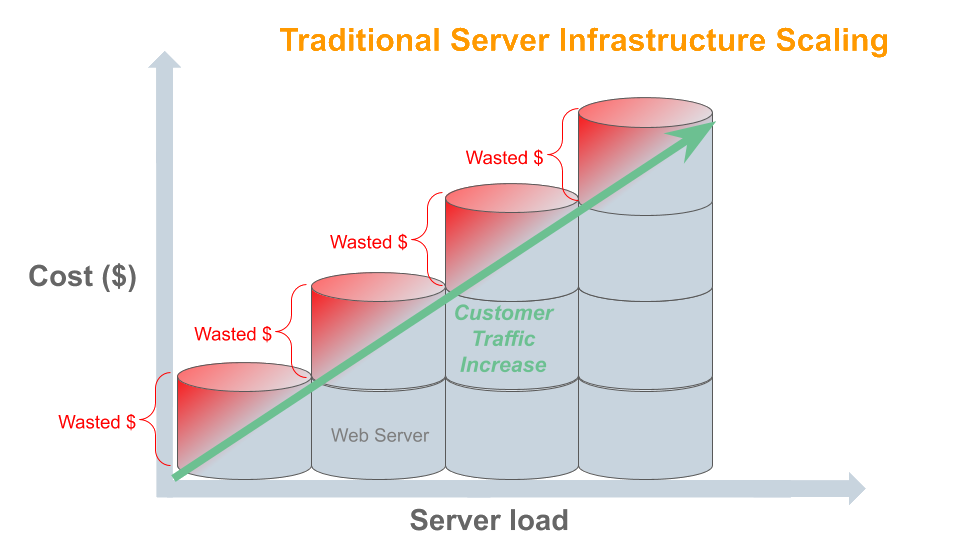

The traditional way of hosting a website requires setting up dedicated servers. As the business grows and website traffic increases, at some point more servers (or bigger servers) need to be added to the web server fleet. Because the fleet has to be sized for the busiest moment, this kind of scaling often leads to over-provisioning, which is wasteful: much of the hardware sits idle outside peak hours.

Many businesses also have peak times. The resources a website needs rise and fall with the time of day, and serverless provides the flexibility to scale up and down as needed, so you pay only for what is actually used.
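To make the over-provisioning argument concrete, here is a minimal Python sketch comparing a fleet sized for the peak hour with pay-per-request billing. The traffic profile, prices, and server capacity are all made-up assumptions, not any vendor's real rates; only the shape of the comparison matters.

```python
import math

# Hypothetical hourly request counts for one day: quiet overnight, busy daytime, evening peak.
HOURLY_REQUESTS = [2_000] * 8 + [20_000] * 10 + [100_000] * 4 + [5_000] * 2

# Assumed prices and capacity, for illustration only (not any vendor's real rates).
SERVER_COST_PER_HOUR = 0.10          # one dedicated server, billed whether busy or idle
SERVER_CAPACITY_PER_HOUR = 50_000    # requests one server can handle in an hour
SERVERLESS_COST_PER_MILLION = 2.00   # pay-per-request price


def servers_needed(hourly: list[int]) -> int:
    """A fixed fleet must be sized for the busiest hour."""
    return math.ceil(max(hourly) / SERVER_CAPACITY_PER_HOUR)


def provisioned_cost(hourly: list[int]) -> float:
    """Peak-sized fleet kept running around the clock."""
    return servers_needed(hourly) * SERVER_COST_PER_HOUR * len(hourly)


def serverless_cost(hourly: list[int]) -> float:
    """Billed only for the requests actually served."""
    return sum(hourly) / 1_000_000 * SERVERLESS_COST_PER_MILLION


def average_utilization(hourly: list[int]) -> float:
    """Fraction of the fleet's total capacity that is actually used over the day."""
    capacity = servers_needed(hourly) * SERVER_CAPACITY_PER_HOUR * len(hourly)
    return sum(hourly) / capacity


print(f"provisioned: ${provisioned_cost(HOURLY_REQUESTS):.2f}/day")
print(f"serverless:  ${serverless_cost(HOURLY_REQUESTS):.2f}/day")
print(f"fleet utilization: {average_utilization(HOURLY_REQUESTS):.0%}")
```

With these invented numbers, the peak-sized fleet costs $4.80 per day while running at about 26% utilization, and per-request billing comes to about $1.25. Real comparisons also depend on compute time, data transfer, and free tiers, which this sketch ignores.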

Scalability

Serverless vendors take care of handling all requests. Whether a website receives one HTTP request or 1,000,000, the underlying serverless service provider allocates enough resources to serve them, within the account's service quotas.
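A back-of-the-envelope way to see what the provider is scaling is Little's law: the number of requests in flight equals the arrival rate times how long each request takes. The sketch below assumes each execution handles one request at a time and uses a hypothetical concurrency quota; real limits vary by provider and account.

```python
import math

# Hypothetical per-account cap on simultaneous executions; real limits vary by provider and account.
CONCURRENCY_QUOTA = 1_000


def required_concurrency(requests_per_second: int, avg_duration_ms: int) -> int:
    """Little's law: requests in flight = arrival rate x time each request takes."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


for rps in (1, 100, 10_000):
    needed = required_concurrency(rps, avg_duration_ms=200)
    status = "within quota" if needed <= CONCURRENCY_QUOTA else "over quota, would be throttled"
    print(f"{rps:>6} req/s -> {needed:>5} concurrent executions ({status})")
```

At 200 ms per request, 100 requests per second need about 20 concurrent executions, while 10,000 requests per second need about 2,000, which would exceed the assumed quota. The provider does the scaling, but the quota is still something the website owner has to plan around.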

Security

There’s no need to update and maintain web server software manually. In general, serverless vendors employ sound security practices to keep the data, and access to it, safe. However, a serverless website isn’t free from security vulnerabilities: website owners still need to adopt security best practices (e.g., setting Content Security Policies on webpages and encrypting network traffic with SSL/TLS).
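As one example of the owner's share of the work, here is a minimal sketch of a Lambda-style Python handler that attaches a Content Security Policy and related security headers to its responses. It assumes the function sits behind an API Gateway proxy-style integration (which expects a dict with `statusCode`, `headers`, and `body`); the header values are example policies, not recommendations for any particular site.

```python
import json

# Example policies only; tailor them to the origins the site actually loads assets from.
SECURITY_HEADERS = {
    # Allow scripts, styles, images, etc. only from the site's own origin; forbid plugins and framing.
    "Content-Security-Policy": "default-src 'self'; object-src 'none'; frame-ancestors 'none'",
    # Ask browsers to use HTTPS (TLS) for this host and its subdomains for one year.
    "Strict-Transport-Security": "max-age=31536000; includeSubDomains",
    # Stop browsers from guessing a different content type than the one declared.
    "X-Content-Type-Options": "nosniff",
}


def handler(event, context):
    """Lambda-style entry point returning a proxy-integration response dict."""
    body = json.dumps({"message": "hello"})
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json", **SECURITY_HEADERS},
        "body": body,
    }


if __name__ == "__main__":
    # Local smoke test: call the handler directly with an empty event and no context.
    print(handler({}, None)["headers"]["Content-Security-Policy"])
```

For purely static files served through a CDN, the same headers are usually attached in the CDN's configuration rather than in code.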

Cons of serverless

Predictability

The pay-as-you-go pricing model makes it hard to predict a project’s cost. For example, a website could receive 100x its usual traffic for a few days because of a surge in related keyword searches, then lose that traffic once the topic becomes less relevant. This kind of usage leads to volatile billing statements. It’s possible to limit traffic manually when a monitoring threshold is breached, but doing so is cumbersome and can limit revenue.
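The volatility is easy to see with a little arithmetic. This sketch compares a steady month with one containing a three-day 100x spike, and checks when cumulative spend crosses a budget threshold. The per-request price and the $50 budget are hypothetical, and real bills also include compute time and data transfer.

```python
from typing import Optional

# Assumed price for illustration; real bills also include compute time and data transfer.
PRICE_PER_MILLION_REQUESTS = 2.00
MONTHLY_BUDGET = 50.00  # hypothetical alert threshold in dollars


def daily_cost(requests: int) -> float:
    return requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS


def monthly_bill(daily_requests: list[int]) -> float:
    return sum(daily_cost(r) for r in daily_requests)


def first_day_over_budget(daily_requests: list[int], budget: float) -> Optional[int]:
    """Return the 1-based day on which cumulative spend first exceeds the budget, or None."""
    spent = 0.0
    for day, requests in enumerate(daily_requests, start=1):
        spent += daily_cost(requests)
        if spent > budget:
            return day
    return None


normal = [300_000] * 30                                      # steady month
spike = [300_000] * 10 + [30_000_000] * 3 + [300_000] * 17   # 100x traffic for three days

for name, month in (("normal", normal), ("spike", spike)):
    print(name, f"${monthly_bill(month):.2f}", first_day_over_budget(month, MONTHLY_BUDGET))
```

With these assumptions, the steady month costs about $18 while the spike month costs about $196, and the budget is crossed on day 11, the first day of the spike. Cloud providers typically offer budget alerts that work on the same principle, but deciding what to do once the alert fires is still a manual call.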

Complexity

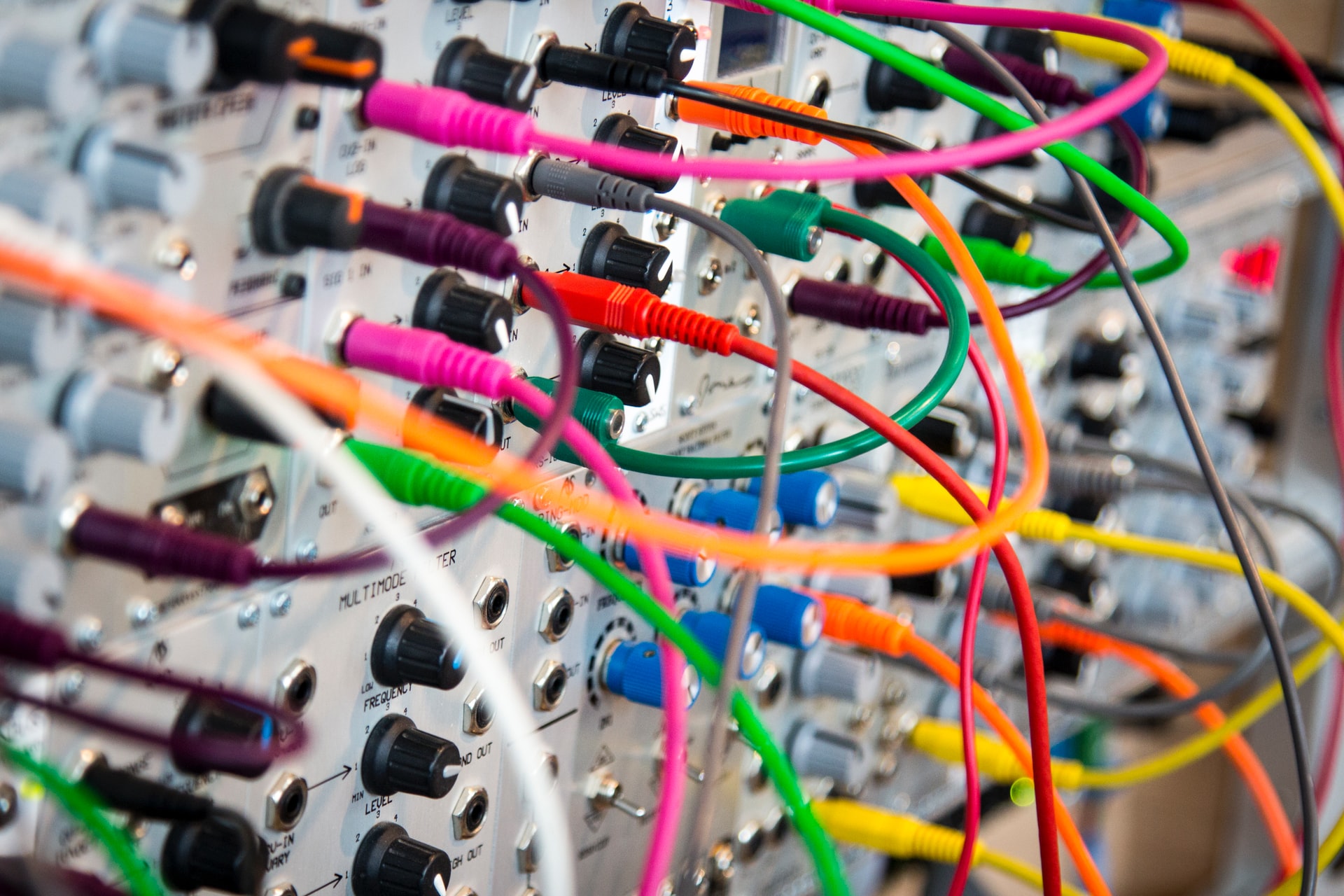

A single server’s responsibility is distributed among numerous modules. For example, a distributed storage service like AWS S3 hosts static files, and a content delivery network such as AWS CloudFront serves frequently accessed files. It gets even more complicated if you want to add more security features or sophisticated dynamic content rendering with other serverless compute services like AWS Lambda.
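To show how one request can be answered by different components, here is a toy in-memory model of that layout: a CDN edge cache in front of an object-store origin, with dynamic paths handed to a function. It is not AWS code; the class, the `/api/` prefix, and the function names are hypothetical stand-ins.

```python
from dataclasses import dataclass, field


def render_dynamic(path: str) -> str:
    """Stand-in for a serverless function (e.g., AWS Lambda) that renders dynamic content."""
    return f"rendered {path}"


@dataclass
class ToySite:
    """In-memory toy model: CDN edge cache -> object-store origin, plus functions under /api/."""
    origin: dict[str, str]                                # stands in for the S3 bucket: path -> file
    cache: dict[str, str] = field(default_factory=dict)   # stands in for the CDN edge cache

    def handle(self, path: str) -> tuple[str, str]:
        """Return (component that answered, response body)."""
        if path.startswith("/api/"):
            return "function", render_dynamic(path)      # dynamic responses are not cached here
        if path in self.cache:
            return "cdn-cache", self.cache[path]         # cache hit: the origin is never contacted
        if path in self.origin:
            self.cache[path] = self.origin[path]         # cache miss: fetch from origin, keep a copy
            return "origin", self.origin[path]
        return "origin", "404 Not Found"


site = ToySite(origin={"/index.html": "<h1>Hello</h1>"})
print(site.handle("/index.html"))   # first request is served by the origin
print(site.handle("/index.html"))   # second request is served from the cache
print(site.handle("/api/time"))     # dynamic path is served by the function
```

Even in this toy, answering "who served this response?" already requires knowing three components, which is the complexity the paragraph above describes.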

More moving parts mean the website or application needs additional monitoring and logging, so that when something goes wrong, the website administrator can quickly identify what needs to be addressed.
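One common way to keep many moving parts debuggable is to emit structured (JSON) log lines tagged with a shared request ID, so lines from different components can be joined later. The sketch below uses Python's standard `logging` and `json` modules; the field names are hypothetical, and in a real deployment the ID would have to be passed between components (for example, in a request header) and the logs shipped to a central log service.

```python
import json
import logging
import uuid

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("site")


def log_event(component: str, request_id: str, message: str, **fields) -> str:
    """Emit one JSON log line; a shared request_id ties together lines from different components."""
    record = {"component": component, "request_id": request_id, "message": message, **fields}
    line = json.dumps(record, sort_keys=True)
    logger.info(line)
    return line


def failing_components(lines: list[str], request_id: str) -> list[str]:
    """Components that reported a server error (status >= 500) for the given request."""
    records = [json.loads(line) for line in lines]
    return [
        r["component"]
        for r in records
        if r["request_id"] == request_id and r.get("status", 0) >= 500
    ]


# One request passing through three components, all tagged with the same ID.
rid = str(uuid.uuid4())
lines = [
    log_event("cdn", rid, "cache miss", path="/account"),
    log_event("origin", rid, "object fetched", path="/account", status=200),
    log_event("function", rid, "render failed", status=500),
]
print(failing_components(lines, rid))
```

Filtering by the request ID points straight at the function as the failing component, instead of leaving the administrator to search each service's logs separately.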

Operations

The operations administrator, or team of engineers, needs expertise across all of these moving parts, and debugging a problem takes more knowledge and time to resolve. For example, when a website is not serving recently updated content, the problem could be anywhere from the static file upload to the content delivery network’s cache.
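For the stale-content example, a first step is often to compare timestamps: did the upload land, and was the CDN's copy cached before or after it? The helper below is a hypothetical sketch of that reasoning. In practice the timestamps would come from the storage service's object metadata and from CDN logs or response headers, and "invalidate" refers to the CDN's cache invalidation feature.

```python
from datetime import datetime, timedelta
from typing import Optional


def diagnose_stale_content(
    uploaded_at: Optional[datetime],  # when the new file reached storage; None if the upload never landed
    cached_at: Optional[datetime],    # when the CDN edge stored its current copy; None if not cached
    cache_ttl: timedelta,             # how long the edge keeps a copy before re-fetching from storage
    now: datetime,
) -> str:
    if uploaded_at is None:
        return "upload: the new file never reached storage"
    if cached_at is None or cached_at >= uploaded_at:
        return "ok: the edge copy is the new file (or will be fetched fresh)"
    expires_at = cached_at + cache_ttl
    if now < expires_at:
        return f"cdn cache: old copy held until {expires_at:%Y-%m-%d %H:%M}; wait or invalidate it"
    return "cdn cache: old copy has expired; the next request should fetch the new file"


now = datetime(2024, 1, 1, 12, 0)
print(diagnose_stale_content(
    uploaded_at=now - timedelta(minutes=10),
    cached_at=now - timedelta(minutes=30),
    cache_ttl=timedelta(hours=1),
    now=now,
))  # cdn cache: old copy held until 2024-01-01 12:30; wait or invalidate it
```

The point is not the helper itself but the order of the checks: rule out the upload first, then the cache, before looking anywhere else.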